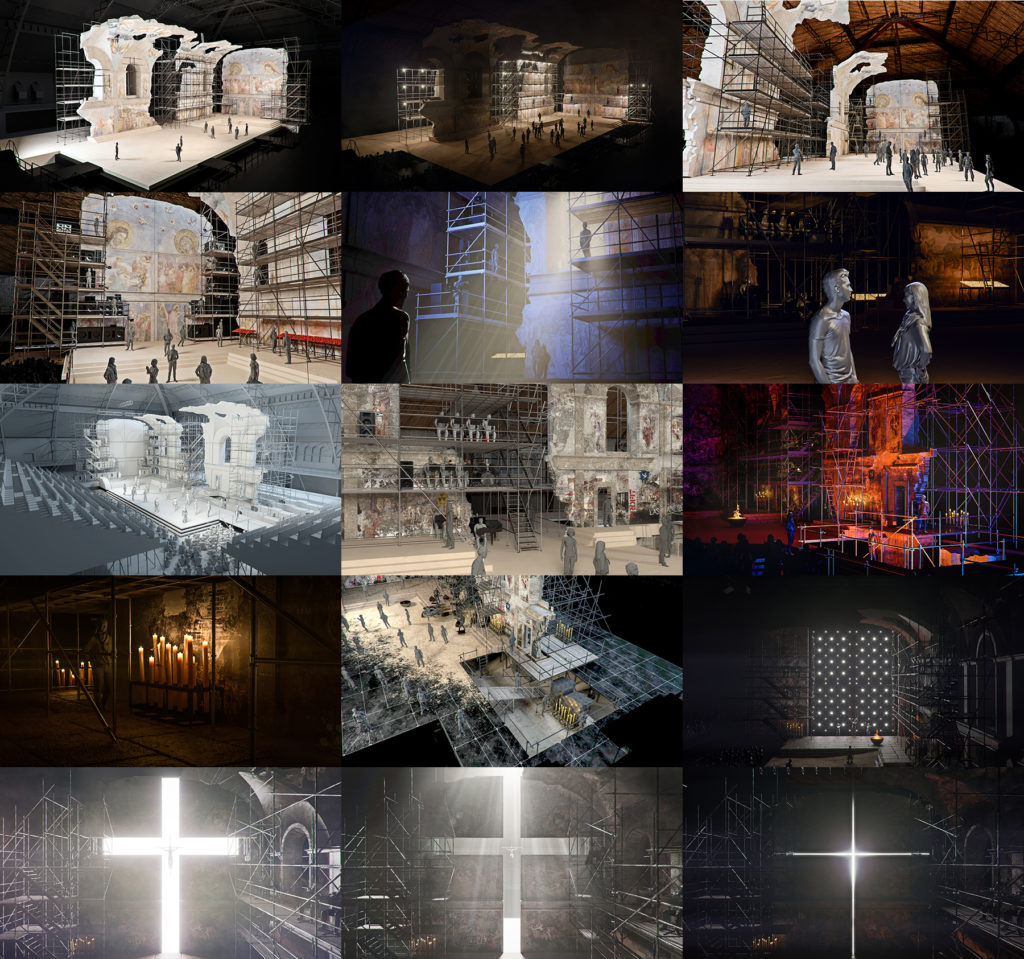

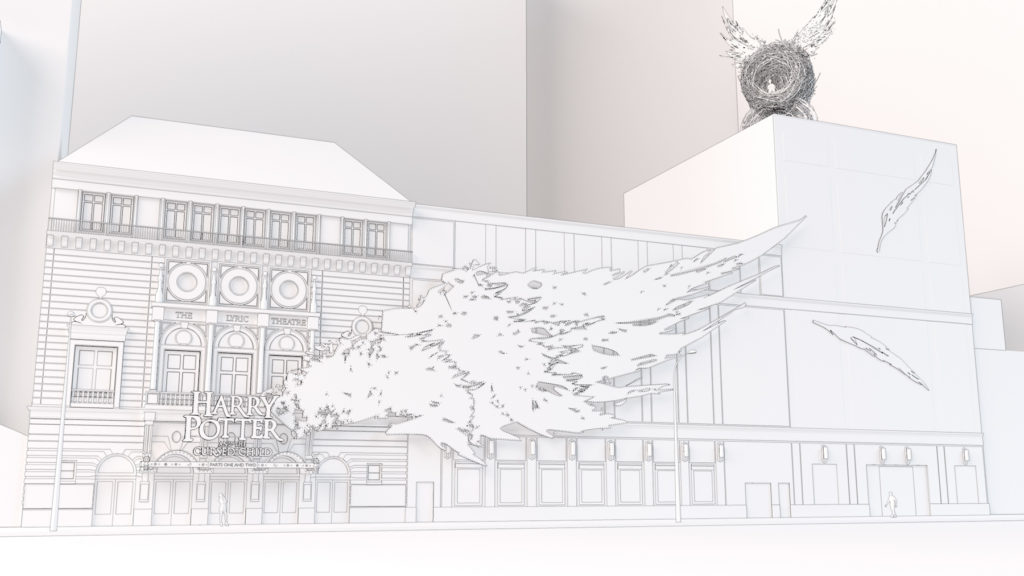

Evan Alexander is a visualizer for stage shows, concerts and live TV events, and has used Corona Renderer to help create the designs for Harry Potter and the Cursed Child in New York, Jesus Christ Superstar Live for NBC, Sam Smith’s “The Thrill of It All” tour, and more.

In this article, he shares background about his career, how his tools and workflow have evolved over the years, and how Corona Renderer for Cinema 4D has become indispensable in his work, where fast turnaround of changes and accurate lighting are critical.

Read about how Evan uses Corona Renderer for stage and set design!

My name is Evan Alexander, and I’m a visualizer for stage shows, concerts and live television events. I specialize in large-scale and touring productions.

Career and History

My training and background are in scenic design for theater and opera: I have an MFA in set design from the University of Washington. I worked for many years in New York on Broadway shows, building physical models and hand drafting; computers were only used for occasional Photoshop work.

I started playing with Flash as a way of photographing the designer’s models and animating those photos. It was pretty crude, and it led me to learn Director, which was much more robust and let us animate layered Photoshop files more cleanly. All of this could probably be done on an iPad now, but at the time it was new, and something people in theater hadn’t seen before.

Eventually I found my way into the event world as a production designer at MTV and started exploring SketchUp, my first foray into 3D. Over the next few years I moved between a few different design firms, and my interest in 3D kept growing and becoming more important to my workflow. I would build in SketchUp, then take that into Photoshop and do a ton of post work on top of it.

Vectorworks is the CAD software that we use, and when it added 3D capabilities, I started playing with them to see where they could go. SketchUp was great for building out forms and masses, but so much of the work I do is about lighting, and I needed a more powerful solution.

In 2007 I was hired to design a touring exhibit for the Discovery Network, and I made up my mind to do it all in Vectorworks 3D: modeling, textures, lighting, the whole thing. It was painfully slow, but it impressed the clients, even with hard, ray-traced shadows everywhere.

Soon after, a colleague mentioned Cinema 4D to me and said it talked to Vectorworks well. I downloaded the demo and never looked back! It has been my go-to DCC ever since. Its flexibility and ease of use, on top of its incredible stability, make it an amazing tool to have in your arsenal.

In 2008 I went fully freelance, and after many years of designing and growing my reliance on 3D, I realized that I was getting more joy out of the renderings than I was from the design itself and the design process.

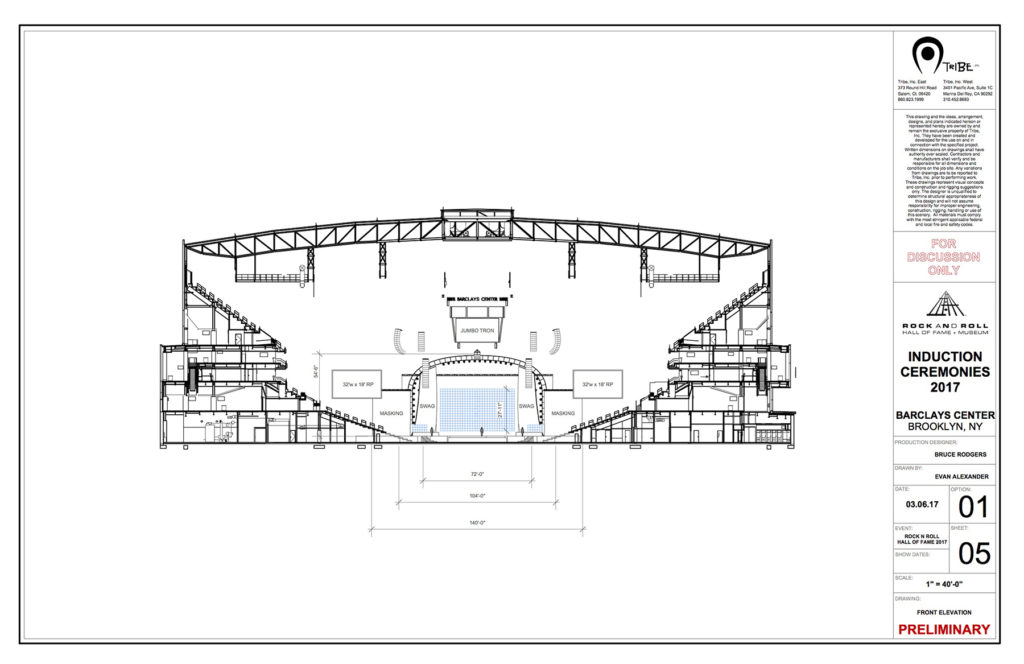

I made the decision to stop designing and to only support other designers with visuals, and I eventually found myself connected with Bruce Rodgers of Tribe, Inc., a firm that designs really large-scale concerts and TV specials.

Bruce took a chance on me, and we immediately jumped into working out the design for the Super Bowl Halftime Show with Bruno Mars. I was freaked out, but I guess it went okay, since it started a five-year collaboration with Tribe. Over that time I was able to create a system and workflow for design renderings and CAD, with multiple revisions, that was efficient and met the needs of every side of production for events of that scale.

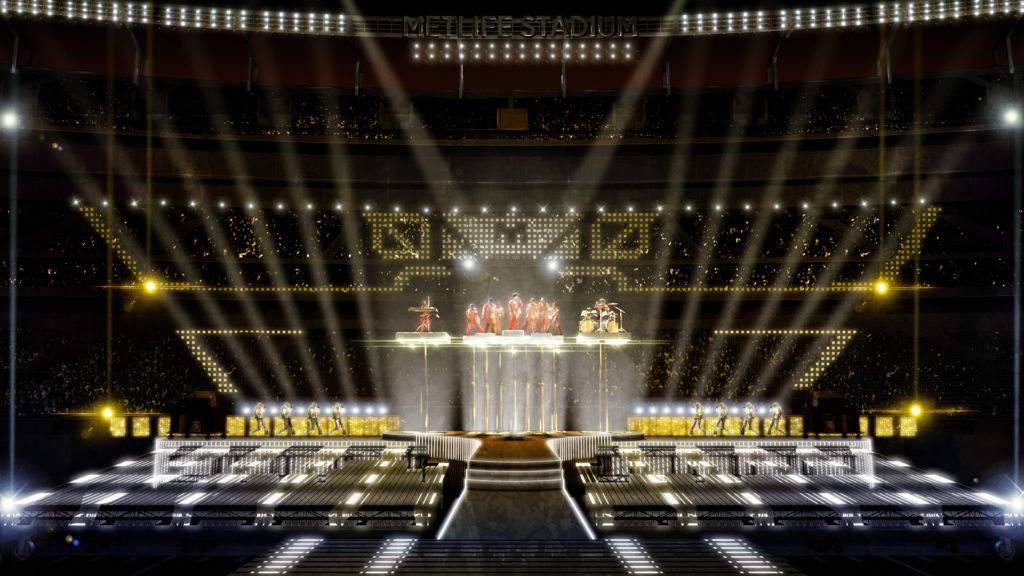

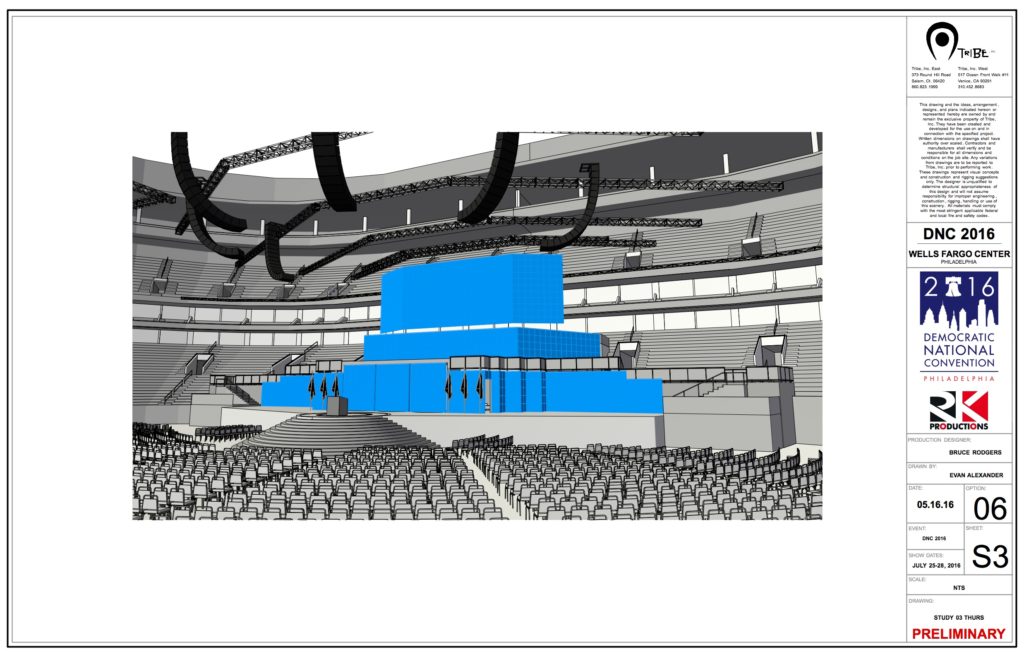

Since then, I have been lucky to collaborate with many designers on a wide variety of projects, including four Super Bowl Halftime Shows (Bruno Mars, Katy Perry, Coldplay, Lady Gaga), the 2016 Democratic National Convention, Eminem & Rihanna’s Monster Tour, and, more recently, Sam Smith’s The Thrill of It All tour, Harry Potter and the Cursed Child in New York, and Jesus Christ Superstar Live for NBC.

The Nature of the Job

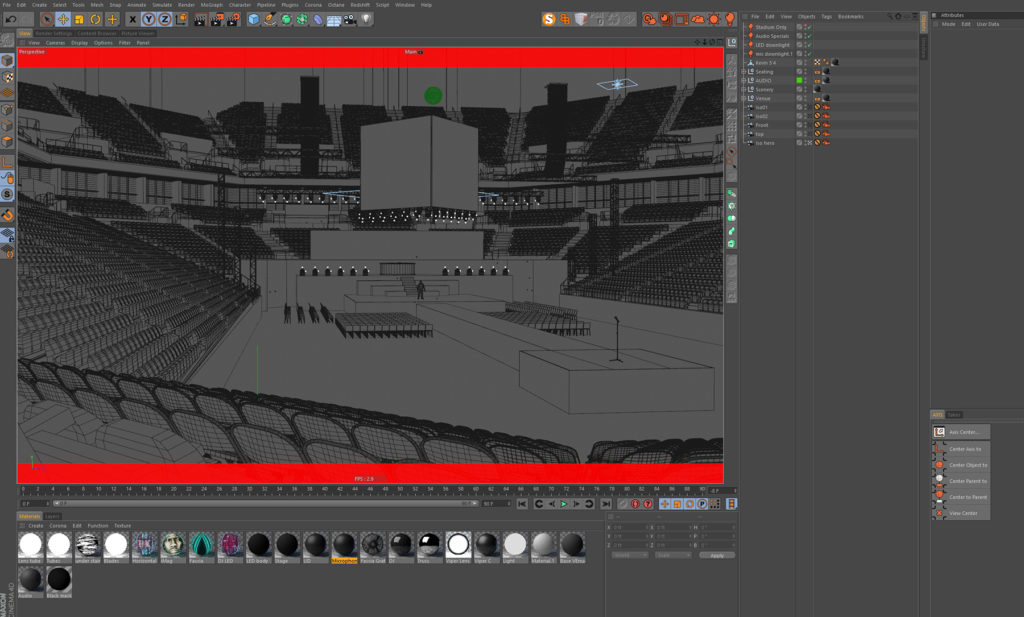

3D work for the stage or camera is a bit different from Motion Graphics or Arch Viz work. I am usually responsible for both the designer’s CADs and the renderings, so to make this work efficiently, I model everything in Vectorworks. That way, I have one master model that I can turn into dimensioned elevations and also push to Cinema 4D for textures, lighting, and camera work.

The 3D CADs are used for designer meetings and budgeting, and are the basis for what the fabricators use to build their construction and engineering drawings. Audio and lighting also use the scenic layers of these drawings for their respective plots.

Usually, on large shows, all departments collectively feed information into these drawings and use them in meetings and for logistics discussions. It’s an important part of the process, and after a while of keeping CAD separate from the visual 3D model, I realized that I needed them to be one and the same to save time, especially for revisions, which happen daily over the course of a few months.

I kept refining this workflow and built up a good library of textures and models to pull from, with Cinema 4D at the core of the rendering side. I would get the model prepared and do some basic lighting, just to get the shadows right, and give everything a 50% grey texture. I would then render that out with Object Buffers on everything (a similar idea to an Object or Material ID pass) and do most of the lighting and texture work in Photoshop.

At the time, this was the fastest way I could get the work out. I didn’t have the turnaround time to do a million test renders or to wait hours and hours for final renders to cook; it’s not uncommon for me to have only one or two days to put together a basic CAD package and a color rendering on a project.

The Physical Renderer in Cinema 4D is great, but I found it too slow for what I needed. Certain GPU engines were really coming into their own at that time, so I jumped on that train: I put a Titan X into my Mac “cheese grater” tower and started playing with them. I found that instant feedback and the idea of an IPR were a game changer for me, especially with lighting, and there was no way to go back to tweak, render, wait, sucks, tweak, render, wait, still sucks, tweak, render…

The problem I faced at that time was that, on my specific hardware, the GPU engines couldn’t efficiently handle the amount of geometry I was throwing at them. The transfer times were tough, and I crashed a lot when trying to push through a stadium with thousands of instances of lights, bodies, speakers, truss, etc.

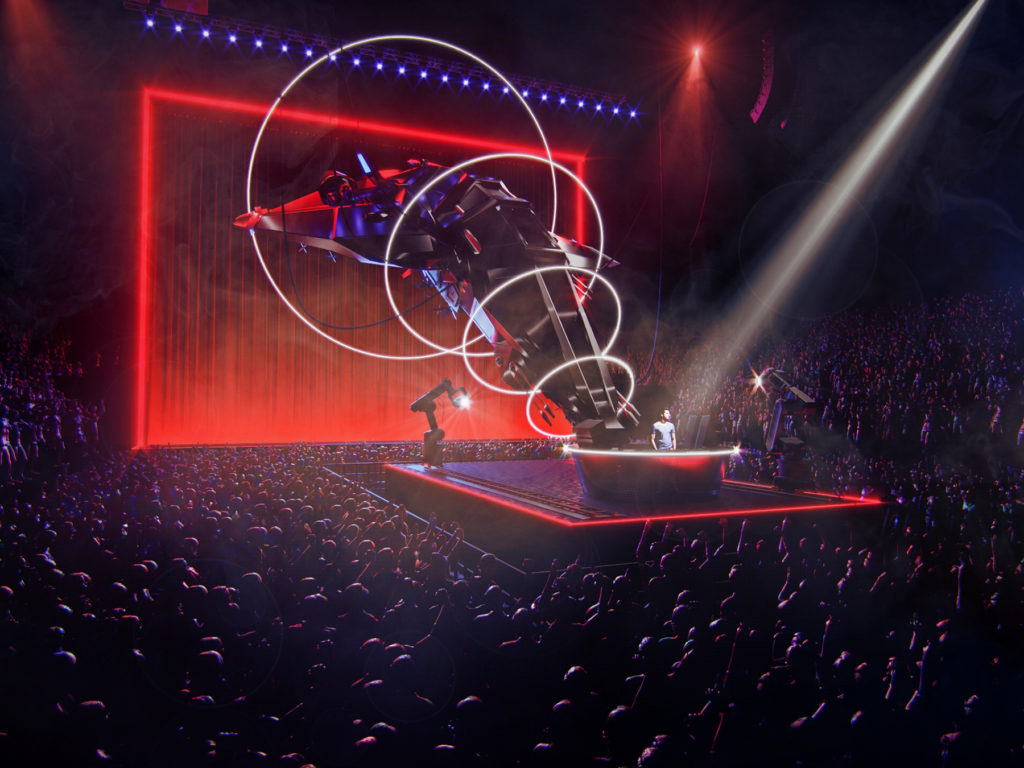

I wanted to see how much I could actually do in 3D to cut down on my post processing time and to get better lighting and reflections. I was tired of painting everything and I wanted to go back to having 3D do the heavy lifting.

Discovering Corona Renderer

I bought a powerful PC, started looking around for another engine, and stumbled across a mention of Corona Renderer on a message board. I remember being instantly struck by how easy it was to get a good-looking image out of the engine with minimal effort. The integration with Cinema 4D is so flawless that anyone comfortable with Cinema can spend about a day playing with Corona and then get straight into serious work.

Also, to my eye, the way Corona handles light is unmatched, and on top of that I can make tonal adjustments on the fly while rendering.

When I started working with Corona, IR (Interactive Rendering) hadn’t been implemented yet for Cinema 4D, but it didn’t matter: rendering directly in the native Picture Viewer was so fast that I could just keep iterating and tweaking and get to better results quickly. It was (and still is) able to handle any amount of geometry I throw at it.

Now that IR is fully in place, creating textures and looking at lighting options is extremely easy and fast, and lighting is the most important part of my workflow. I think what sets the work apart, specific to my industry, is the attention paid to lighting (being married to a Broadway lighting designer helps here!) to help tell the story. Concept artists for games and films have understood the importance of good lighting for a while, but we are only now catching up on a serious level.

Workflow

On a typical project, the Production Designer will send me anything ranging from a stick figure drawing on the back of a napkin, to a SketchUp model or a Photoshop collage, to just research and written descriptions.

From there I build out a first pass of everything in 3D in Vectorworks, where I can generate a ground plan, centerline section, and front elevation of the set. Usually we have to build out the stadium too: I get a stack of Revit or DWG files to sort through and pare down to a workable model. They are architectural drawings, so they include every light switch, HVAC, plumbing, concessions, etc.

In Vectorworks I build a lean and mean model that can be used for both CADs and rendering. I’ll spend a few days modeling this while the designer does his or her preliminary design work. I then move the model to Cinema 4D, throw in some very basic general lighting, add a white texture to everything, and crank out some clay renders for the team to look over. With the Denoiser, I can let the renders cook for only 2-3 minutes and get a good-enough result to pass along to the client.

From there we will tweak the design and refine it. I’ll build out real textures and start to put a more advanced lighting system into place. Then, another round of clay renders for review and notes – this can go on for a few days.

Once we are close to where the designer likes it, we pick a camera POV for the hero shot. I typically screen share the designer into my system, and we fly around together to pick the camera placement and lens size. Corona makes this very simple, since I can get almost instant results for the designer to see as we are building the cameras. Working with wireframe is one thing, but being able to get mass and lighting going in just a few seconds gives designers the confidence they need in where we are headed.

Official announcement video for Sam Smith’s The Thrill Of It All world tour

On a concert tour, we will do about five full-color shots and then anywhere from 10 to 15 clay renders from different vantage points around the arena. With Material Override in Corona, I can easily switch between color and clay renders, though I usually have separate lighting systems and camera exposures for each and switch back and forth between them.

The color shots give the producers and talent an overall idea of the look and vibe we are going for, and the clay renders let you see what the set is really going to look like and how it all works. Both have equal value to us in the process. Revisions get updated in the Vectorworks master model to keep the CADs up to date and are then pushed back into Cinema 4D for the renders.

This process can then go on for weeks or even months as we make design adjustments, either for the needs of the show or for the budget. On something like Superstar, the design process happens alongside the rehearsal process with the actors, so it is not uncommon for the production designer to come back to us with a new set of needs every other day.

That was also a show where we had to really look at camera angles and tight shots, since it was being filmed for TV. Overall, Jason Sherwood and I made about 120 renders over the course of six months. We would feed the master Vectorworks model to him, and he would fly around and screen-grab POVs that he liked for me to explore in full render or clay. There were three of us working on the modeling, feeding CADs to another team of four that would move the models into AutoCAD for drafted elevations and ground plans.

Jeff Hinchee built beautiful paint textures for us in Photoshop. I would unwrap the models and give him a template to work from. Melissa Shakun, the amazing Art Director, kept us all moving in the same direction.

Building flexibility into the system for revisions, as well as being an organized part of a larger pipeline, is key to making it work. On most projects I work as a one-man band, but I like being part of a team too, working together towards a common goal. Something like a Super Bowl Halftime Show takes about five months of design process, working on it almost daily, and the marquee in Times Square for Harry Potter was off and on for over a year.

Personal Work

So beyond the beautiful images Corona Renderer can produce, it also works really well for me and my workflow. We have a six-year-old daughter and my wife has a very busy career, so it is common for me to leave my studio for the afternoon to pick up my daughter from school and then, after she goes to bed, go back to finishing up work for the day. I have a large Windows tower at the office dedicated to Cinema 4D, but at home I just use a modest iMac, and Corona lets me jump between those two systems almost flawlessly. It might take more time to get to a final image at home, but it can still handle whatever I throw at it, and this is where the denoiser and multi-passes really shine for me.

I am a compulsive creator. I need to make something every day; I can’t help it. My large commercial projects can run from months to years, and it can be hard to stay interested in revision number 438 after six months of work on a project.

Making something start to finish in a few hours gives me an outlet for that creative itch, and it also lets me experiment with new techniques and methods that I can then fold back into my professional work. Corona Renderer makes this process fast and easy for me, and when I only have an hour or maybe two a day to squeeze in that creativity, this is essential. I can’t recommend enough making your own quick pieces to help enhance your larger, long-term projects. The only way to get better is to make a large volume of work.

Production designers think what I do is magic, and Corona is a huge part of that. I think what the Render Legion developers do is magic, and I can’t wait for the official release of the Cinema 4D version! There are still parts of the engine for me to explore; I haven’t even started on LightMix yet, but I imagine that will be a big one for me.

I’m looking forward to the nodal shading system as well. I want to say thank you to the team for all the work so far, and for the generosity to let us fully work with the product before it is officially released. Communication with the developers has been great and I look forward to seeing what comes next in Corona!

Thank you for reading. I hope you have enjoyed the article, and that you will think about this process and what is involved the next time you see a concert or live show that you enjoy.

Evan Alexander

Links:

www.evanalexander.com

www.ardizzonewest.com

www.christinejonesworks.com

www.jasonsherwooddesign.com

www.tribedesign.net
